Earlier this year I wrote about how to mimic the “always-on” feature in a free Azure Website by running a custom webjob that periodically requests the site, keeping it in memory and therefore fast and responsive.

Last week a friendly reader pointed out that my original solution no longer works: Microsoft no longer lets the site stay in memory, even if it frequently receives client requests. I tested this over the past week and it is indeed true. Now only requests to the site from the Azure portal or from the Kudu (scm) interface will keep it in memory. What a bummer.

But fear not: I have made a new webjob that will keep your free Azure website in memory. I have had it running for 3 days without interruption, so it works just as well as my first webjob did when I released it.

The solution is simple: instead of requesting a publicly available page on your site, you periodically request the Kudu (scm) portal with your username and password. This tricks Azure into thinking that you are accessing the portal, which stops it from unloading your site.

The code looks like this:

[csharp]
using System;
using System.Configuration;
using System.Diagnostics;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

namespace SJKP.AzureKeepWarm
{
    class Program
    {
        static void Main(string[] args)
        {
            var runner = new Runner();
            // The Kudu (scm) URL to request.
            var siteUrl = ConfigurationManager.AppSettings["SiteUrl"];
            // Delay between requests, in seconds.
            var waitTime = int.Parse(ConfigurationManager.AppSettings["WaitTime"]);
            // HitSite loops forever, so this keeps the webjob process alive.
            runner.HitSite(siteUrl, waitTime).Wait();
        }

        private class Runner
        {
            // The scm site requires the site's deployment credentials:
            // user name "$" + site name, password = userPWD from the publishing profile.
            private readonly HttpClient client = new HttpClient(new HttpClientHandler
            {
                Credentials = new NetworkCredential("[Your-WebsiteName]", "[YourPassWord]")
            });

            public async Task HitSite(string siteUrl, int waitTime)
            {
                while (true)
                {
                    try
                    {
                        // Dispose each response so its connection is released.
                        using (var response = await client.GetAsync(new Uri(siteUrl)))
                        {
                            Trace.TraceInformation("{0}: {1}", DateTime.Now, response.StatusCode);
                        }
                    }
                    catch (Exception ex)
                    {
                        // Log and keep going; a single failed request must not stop the loop.
                        Trace.TraceError(ex.ToString());
                    }

                    await Task.Delay(waitTime * 1000);
                }
            }
        }
    }
}
[/csharp]
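A possible variation on the handler above: with HttpClientHandler.Credentials the first request goes out without credentials and is only retried after the server answers with a 401 challenge. The sketch below instead sends an HTTP Basic Authorization header up front on every request. It is my own illustration, not part of the webjob; the KuduClientFactory name is hypothetical, and it assumes the scm site accepts Basic authentication with the same user name and password.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text;

// Hypothetical helper: builds an HttpClient that sends Basic credentials
// preemptively instead of waiting for a 401 challenge.
public static class KuduClientFactory
{
    public static AuthenticationHeaderValue CreateBasicAuthHeader(string userName, string password)
    {
        // Basic scheme: base64 of "user:password".
        var raw = Encoding.UTF8.GetBytes(userName + ":" + password);
        return new AuthenticationHeaderValue("Basic", Convert.ToBase64String(raw));
    }

    public static HttpClient Create(string userName, string password)
    {
        var client = new HttpClient();
        // Attached to every request made through this client.
        client.DefaultRequestHeaders.Authorization = CreateBasicAuthHeader(userName, password);
        return client;
    }
}
```

In the Runner class, the client field could then be initialized with KuduClientFactory.Create("$statsofpoe", "...") (your own site name and userPWD) instead of the HttpClientHandler.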

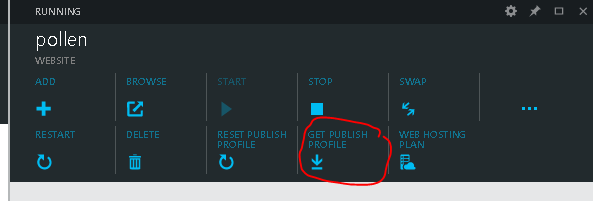

In the code above you have to replace [Your-WebsiteName] with the name of your website, prefixed with $. In my case the website is named statsofpoe, so the user name becomes $statsofpoe. [YourPassWord] must be replaced with the password from your publishing profile file: use the value of the userPWD attribute.
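If you would rather not copy the password by hand, both values can be read out of the publishing profile file itself. This is a sketch under assumptions: it expects the usual layout of that file (a publishData root with one publishProfile element per publish method) and picks the Web Deploy (MSDeploy) profile, whose userName attribute normally holds the $sitename form. The PublishProfileReader and KuduCredentials names are hypothetical and not part of any Azure SDK.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical container for the scm credentials.
public sealed class KuduCredentials
{
    public string UserName { get; }
    public string Password { get; }

    public KuduCredentials(string userName, string password)
    {
        UserName = userName;
        Password = password;
    }
}

// Hypothetical helper: reads userName/userPWD from the text of a
// publishing profile (.PublishSettings) file.
public static class PublishProfileReader
{
    public static KuduCredentials Read(string publishSettingsXml)
    {
        var doc = XDocument.Parse(publishSettingsXml);

        // Assumption: the MSDeploy profile carries the "$sitename" user name;
        // other profiles in the file (e.g. FTP) may use a different format.
        var profile = doc.Descendants("publishProfile")
            .FirstOrDefault(p => (string)p.Attribute("publishMethod") == "MSDeploy");

        if (profile == null)
            throw new InvalidOperationException("No MSDeploy publishProfile found.");

        return new KuduCredentials(
            (string)profile.Attribute("userName"),
            (string)profile.Attribute("userPWD"));
    }
}
```

The result of PublishProfileReader.Read(File.ReadAllText(path)) could then be passed to NetworkCredential instead of the hard-coded placeholders.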

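Finally, change the value of the SiteUrl app setting so it points to https://statsofpoe.scm.azurewebsites.net/azurejobs/#/jobs/continuous/keepwarm (with your own site name in place of statsofpoe), and set WaitTime to the number of seconds to wait between requests.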
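Since the scm host name is just the site name plus .scm.azurewebsites.net, the SiteUrl value can be derived from it. It is also worth knowing that everything from # onwards is a URI fragment, which HTTP clients never send to the server, so what the webjob actually requests is /azurejobs/ on the scm site. The small sketch below illustrates both points; the KeepWarmUrl class is hypothetical, and treating keepwarm as the continuous webjob's name is my reading of the URL above.

```csharp
using System;

// Hypothetical helper, not part of the original webjob.
public static class KeepWarmUrl
{
    // Builds the SiteUrl value for a given site and continuous webjob name.
    public static string ForSite(string siteName, string jobName)
    {
        return "https://" + siteName + ".scm.azurewebsites.net/azurejobs/#/jobs/continuous/" + jobName;
    }

    // Returns scheme, host and path only: the part sent to the server.
    // The fragment (from '#' onwards) stays on the client.
    public static string RequestedPart(string siteUrl)
    {
        return new Uri(siteUrl).GetLeftPart(UriPartial.Path);
    }
}
```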

That’s it: you now have a free Azure Website that is as responsive as a paid one with the “always-on” feature enabled.